Machine Learning Workflow in AWS SageMaker

Understanding Machine Learning Workflows

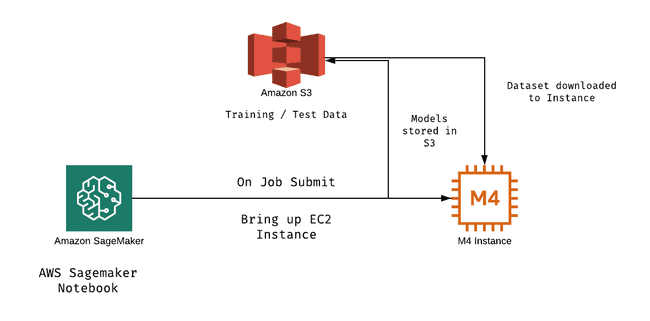

Let's start by understanding what the machine learning workflow looks like and the tools AWS SageMaker provides to make it easy for ML engineers to explore, build, and deploy machine learning models.

Exploring data using AWS SageMaker notebooks

To create a SageMaker notebook, go to AWS SageMaker in the AWS console and create a notebook instance. Make sure you select a suitable instance type. If you are not sure, start with something small, such as a medium instance type, and move to a beefier machine once you have figured out the exact steps.

Why use a notebook in the cloud rather than on your laptop? If you are training a model and you want iteration speed, a laptop won't fly. That is exactly where AWS SageMaker is beneficial, as you can scale the notebook instance by changing its instance type in the console.

Here is a simple workflow which has worked well for me and also saves money.

- Start with a small instance for the initial experiments, while you are still exploring and figuring out exactly what you want to try.

- Once that is done and you want to run with a larger dataset or need more CPU, change the notebook instance size from medium to whatever you need for the duration of the experiment.
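
The resize step in the workflow above can also be scripted. The following is a minimal sketch, assuming a boto3 SageMaker client is passed in and that the notebook is currently running. The function name `resize_notebook_instance` and the instance names in the usage comment are placeholders, not something from the original workflow.

```python
def resize_notebook_instance(sm_client, name, instance_type):
    """Stop a running notebook instance, wait until it is stopped,
    then change its instance type."""
    # Assumption: the notebook is currently InService. The instance type
    # is changed while the notebook is stopped.
    sm_client.stop_notebook_instance(NotebookInstanceName=name)
    sm_client.get_waiter("notebook_instance_stopped").wait(
        NotebookInstanceName=name
    )
    sm_client.update_notebook_instance(
        NotebookInstanceName=name,
        InstanceType=instance_type,
    )
    return instance_type


# Usage (requires AWS credentials; the names below are placeholders):
# import boto3
# sm = boto3.client("sagemaker")
# resize_notebook_instance(sm, "my-exploration-notebook", "ml.m5.2xlarge")
# Once the update has finished, restart the notebook with:
# sm.start_notebook_instance(NotebookInstanceName="my-exploration-notebook")
```

Taking the client as a parameter keeps the function easy to try out with a stub before pointing it at a real account.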

Notebooks → Script Mode

Now that you have decided on the features you need and have figured out the data sampling strategy, it is time to start running more experiments with a larger dataset and select the best hyperparameters. This is particularly true for deep learning models.

If you can use the pre-built environments provided by AWS, you can extract the code from the notebook into a script. The script can then be run by a hyperparameter tuning job to test different combinations of parameters and pick the best model.
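
To make "different combinations of parameters" concrete, here is a small sketch in plain Python. It is not SageMaker's tuning API; it only enumerates a grid of values and shows the command-line arguments each run of the script would receive. The hyperparameter names and values are illustrative, and the helpers `expand_grid` and `to_cli_args` are hypothetical.

```python
from itertools import product


def expand_grid(grid):
    """Return one dict per combination of the values in `grid`."""
    names = list(grid)
    return [
        dict(zip(names, values))
        for values in product(*(grid[name] for name in names))
    ]


def to_cli_args(params):
    """Render a hyperparameter dict as --name value command-line arguments."""
    args = []
    for name, value in params.items():
        args += ["--" + name, str(value)]
    return args


# Hypothetical search space; the names and values are placeholders.
grid = {"learning_rate": [0.1, 0.01], "batch_size": [64, 128]}
combos = expand_grid(grid)

for params in combos:
    print(" ".join(to_cli_args(params)))
```

A managed tuning job typically searches the space more cleverly than an exhaustive grid, but the contract with the script is the same: each run sees one set of values as command-line arguments.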

AWS SageMaker handles a few things for you. When you submit a job, SageMaker brings up an instance, copies your code to that instance, and passes the local paths where the data files are stored to your training script as environment variables.

- The training dataset path is passed as the SM_CHANNEL_TRAIN environment variable

- The test dataset path is passed as the SM_CHANNEL_TEST environment variable

Here is a sample script file and what it would look like:

    import pandas as pd
    import numpy as np
    import os
    import argparse
    import joblib
    from sklearn.metrics import log_loss
    from keras import Model
    # .....
    # .....


    def preprocess_data():
        # Write preprocessing code here
        pass


    def get_model(args):
        # Build the model from the hyperparameters in args and return it
        pass


    def parse_args():
        parser = argparse.ArgumentParser()

        # hyperparameters sent by the client are passed as command-line arguments to the script
        parser.add_argument('--epochs', type=int, default=1)
        parser.add_argument('--batch_size', type=int, default=64)
        parser.add_argument('--learning_rate', type=float, default=0.1)
        parser.add_argument('--token_vocab', type=int, default=10000)
        parser.add_argument('--shared_embed_size', type=int, default=16)
        parser.add_argument('--dense_width', type=int, default=128)
        parser.add_argument('--dropout', type=float, default=0.1)

        # data directories
        parser.add_argument('--train', type=str, default=os.environ.get('SM_CHANNEL_TRAIN'))
        parser.add_argument('--test', type=str, default=os.environ.get('SM_CHANNEL_TEST'))

        # model directory: we will use the default set by SageMaker, /opt/ml/model
        parser.add_argument('--model_dir', type=str, default=os.environ.get('SM_MODEL_DIR'))

        # parse_known_args ignores any extra arguments the script does not declare
        args, _ = parser.parse_known_args()
        return args


    if __name__ == '__main__':
        args = parse_args()
        preprocess_data()
        model = get_model(args)
        # For a Keras model, model.save(...) is a common alternative to joblib.dump
        joblib.dump(model, os.path.join(args.model_dir, 'model.pkl'))
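
Because the data and model paths arrive as environment variables, the argument handling can be tried outside SageMaker by setting those variables yourself. The sketch below is a cut-down version of the argument parser with only three arguments; the `/tmp/...` paths are placeholders, and the `argv` parameter is an addition made here so the parser can be called with an explicit argument list.

```python
import argparse
import os


def parse_args(argv=None):
    parser = argparse.ArgumentParser()
    parser.add_argument("--epochs", type=int, default=1)
    # The defaults are read from the environment when the parser is built.
    parser.add_argument("--train", type=str,
                        default=os.environ.get("SM_CHANNEL_TRAIN"))
    parser.add_argument("--model_dir", type=str,
                        default=os.environ.get("SM_MODEL_DIR"))
    # parse_known_args ignores arguments the script does not declare.
    args, _unknown = parser.parse_known_args(argv)
    return args


# Simulate the variables SageMaker sets inside the training instance.
os.environ["SM_CHANNEL_TRAIN"] = "/tmp/data/train"  # placeholder path
os.environ["SM_MODEL_DIR"] = "/tmp/model"           # placeholder path

args = parse_args(["--epochs", "3"])
print(args.epochs, args.train, args.model_dir)
```

An explicit `--train` on the command line still overrides the environment variable, which is handy for a quick local run against a small sample.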

How to submit SageMaker Jobs

Now, with the script ready, we can submit any number of jobs with different parameters and get the experiments to run faster. Here is an example of how to submit a job.

    import os
    import boto3
    from sagemaker import get_execution_role
    from sagemaker.session import Session
    from sagemaker.tensorflow import TensorFlow

    sess = boto3.Session()
    sm = sess.client('sagemaker')
    bucket = 'your-bucket-name'
    sagemaker_session = Session(sess, sm, default_bucket=bucket)
    role = get_execution_role()

    model_dir = '/opt/ml/model'

    # Local working directories on the notebook instance
    data_dir = os.path.join(os.getcwd(), 'data')
    os.makedirs(data_dir, exist_ok=True)
    train_dir = os.path.join(os.getcwd(), 'data/train')
    os.makedirs(train_dir, exist_ok=True)
    test_dir = os.path.join(os.getcwd(), 'data/test')
    os.makedirs(test_dir, exist_ok=True)
    raw_dir = os.path.join(os.getcwd(), 'data/raw')
    os.makedirs(raw_dir, exist_ok=True)

    # S3 locations of the train and test channels
    inputs = {
        'train': 's3://your-bucket-name/sagemaker/data/train',
        'test': 's3://your-bucket-name/sagemaker/data/test'
    }

    # This is the location where the model artifacts will be stored
    s3_output_location = 's3://your-bucket-name/sagemaker/model_artifacts'

    parameters = {'epochs': 1, 'batch_size': 8192, 'learning_rate': 0.01}

    # This is where, depending on the app, we can scale to a bigger machine or machines
    # with GPU support. We will only be billed for the time the job is running, which
    # is a plus point.
    train_instance_type = 'ml.c5.xlarge'

    # Note: train_instance_type, train_instance_count and script_mode are the argument
    # names from version 1 of the SageMaker Python SDK. Version 2 renamed the first two
    # to instance_type and instance_count and dropped script_mode.
    estimator = TensorFlow(entry_point='train.py',
                           model_dir=model_dir,
                           train_instance_type=train_instance_type,
                           train_instance_count=1,
                           hyperparameters=parameters,
                           role=role,
                           base_job_name='sample-job',
                           framework_version='2.1',
                           py_version='py3',
                           output_path=s3_output_location,
                           sagemaker_session=sagemaker_session,
                           script_mode=True)  # Make sure you pass script_mode as true

    estimator.fit(inputs, logs='All', wait=False)

For more on this, take a look at AWS SageMaker Training. Let me know if you have any questions about any of the steps above and I will be happy to help.
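
Since the job above is submitted with `wait=False`, the call returns immediately and the job keeps running in the background. One way to check on it later is to poll its status. This is a minimal sketch, assuming a boto3 SageMaker client is passed in; the helper `wait_for_training_job` and the polling interval are hypothetical choices, not part of the original setup.

```python
import time

# Statuses after which a training job will not change any further.
TERMINAL_STATES = {"Completed", "Failed", "Stopped"}


def wait_for_training_job(sm_client, job_name, poll_seconds=60, sleep=time.sleep):
    """Poll describe_training_job until the job reaches a terminal status."""
    while True:
        response = sm_client.describe_training_job(TrainingJobName=job_name)
        status = response["TrainingJobStatus"]
        if status in TERMINAL_STATES:
            return status
        sleep(poll_seconds)


# Usage (requires AWS credentials and a submitted job):
# import boto3
# sm = boto3.client("sagemaker")
# status = wait_for_training_job(sm, estimator.latest_training_job.name)
# print(status)
```

The `sleep` parameter exists only so the loop can be exercised quickly with a stub client.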